We know how to learn, but we don’t know how to un-learn or how to forget. If you’ve seen the movie Borat, and seen him wrestle with Azamat Bagatov, you have an image in your head that you’d rather not remember. You might have forgotten it, but simply reading about it might bring the grotesque images back to mind. You’re welcome. If you haven’t seen it, and I don’t recommend seeing it, be forewarned that it is NSFW in an ugly way.

When we’re exposed to something disgusting, we have no way of cleansing our minds of it. Even though we might have pushed the memory away from our conscious thoughts and left it to slowly decay, the simple mention of it can bring it back. It wasn’t something we had forgotten; it was something we swept aside and let decay like a radioactive element.

Our brains don’t have a “Recycle Bin” to empty the way a computer can dispose of unwanted files. Deep neural networks, whether made of fat in our heads, or made of silicon and abstractions on computers, are not good at forgetting information they’ve seen.

Let me talk a bit more about the “artificial” ones first, and I’ll revisit the biological neurons later.

When a deep learning network sees data, it adjusts its model weights to incorporate the new data into a probability distribution representing all the data seen so far. And that process is only additive. As the network keeps seeing more and more data, it incorporates more and more things into that distribution. Deep learning, like other machine learning, works by transforming the statistical distribution of the data, and not by having a semantic understanding of what’s happening. When a deep learning model can differentiate between images of cats and dogs, it has just learned that the pixels of an image are more likely to come from the distribution of cat images than from the distribution of dog images. It has no idea that these are animals.

But also, if we trick the model by telling it that a bunch of images of red flowers are images of cats, it will take a long time before it can un-learn the idea that anything reddish or flower-like is a cat.
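To make the "only additive" point concrete, here is a deliberately tiny sketch. It is not a neural network: `TallyClassifier` is a hypothetical toy I made up for illustration, which estimates how likely a label is for a feature purely from running counts. The feature name `"red"` and the numbers are assumptions too. What it shows is that a model which can only add evidence has no delete button, so the only way to correct bad examples is to pile good ones on top.

```python
from collections import Counter


class TallyClassifier:
    """Toy model (hypothetical): estimates P(label | feature) from counts.

    It can only add evidence; there is no operation that removes it.
    """

    def __init__(self):
        self.counts = {}

    def learn(self, feature, label):
        # Learning is purely additive: bump the counter for this pair.
        self.counts.setdefault(feature, Counter())[label] += 1

    def prob(self, feature, label):
        seen = self.counts.get(feature, Counter())
        total = sum(seen.values())
        return seen[label] / total if total else 0.0


model = TallyClassifier()

# Trick the model: show it 100 red flowers, all labeled as cats.
for _ in range(100):
    model.learn("red", "cat")

# Correct it: keep showing properly labeled red flowers until the model
# no longer thinks "red" is at least as likely to be a cat as not.
corrections = 0
while model.prob("red", "cat") >= 0.5:
    model.learn("red", "flower")
    corrections += 1

print(corrections)               # how many good examples it took
print(model.prob("red", "cat"))  # the wrong belief has shrunk, not vanished
```

In this toy it takes more correct examples than there were wrong ones just to tip the balance, and the leftover belief that red things are cats never reaches zero. Real networks are far messier than a counter, so take this as an intuition pump rather than a description of how they actually behave.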

To understand why, we need to go a little deeper into how deep learning models work. The way a deep learning model represents that distribution of cat images is to take the multi-dimensional space of the image, with one dimension for each color channel of each pixel, and apply a series of transformations to it. Each step in the series is a linear transformation followed by some nonlinearity.

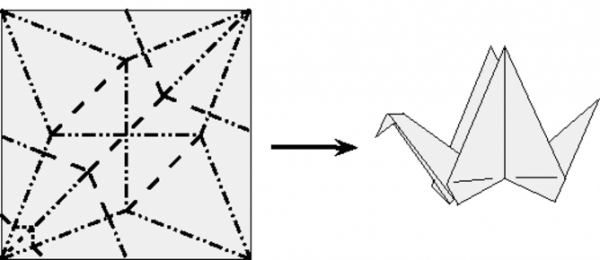

It’s as if you have a piece of paper that you can iteratively rotate (linear), move around (again linear, loosely speaking), and fold (non-linear). The linear transformations don’t necessarily change the paper, but the folding does, because suddenly points on the paper that were far from each other are now close to each other.

And the paper is thick - not just two dimensional, but many, many dimensional.
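Here is a small sketch of the paper analogy in two dimensions. The function names `rotate`, `shift` and `fold` are my own, and using an absolute value as the "fold" is an assumption chosen for simplicity; real networks use other nonlinearities, and many more dimensions. The point is only that rotating and moving keep two points the same distance apart, while folding can bring them together.

```python
import math


def rotate(point, angle):
    """Linear step: rotate a 2D point around the origin."""
    x, y = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y)


def shift(point, dx, dy):
    """Move the sheet around (strictly speaking an affine step)."""
    x, y = point
    return (x + dx, y + dy)


def fold(point):
    """Non-linear step: fold the sheet along the vertical line x = 0."""
    x, y = point
    return (abs(x), y)


def distance(p, q):
    return math.hypot(p[0] - q[0], p[1] - q[1])


# Two points on opposite sides of the sheet.
a, b = (-3.0, 1.0), (3.0, 1.0)

d_original = distance(a, b)
d_rotated = distance(rotate(a, 0.7), rotate(b, 0.7))
d_shifted = distance(shift(a, 2.0, -1.0), shift(b, 2.0, -1.0))
d_folded = distance(fold(a), fold(b))

# Rotating and shifting keep the points apart; folding lands them
# on top of each other.
print(d_original, d_rotated, d_shifted, d_folded)
```

Once the fold has put the two points in the same place, nothing in the later steps can tell them apart again, which is a decent picture of what a crease does.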

When the model learns it right, sometimes it looks like a beautiful origami.

Sometimes it looks like a messy ball.

If you’ve got a ball, and you want to make it pretty, how do you do it? It’s not clear.

The paper has deformation memory. Many of the creases cannot be ironed out. In the brain, creases are created by trauma, learning the wrong things, or brainwashing. You could be a mathematician trying to understand concepts you thought impossible, like a point connected to every other point. You could be a person who moved to a different country or town, where suddenly people are a lot more direct in expressing disagreement. You could be someone who has always depended on institutions to provide, and who is now trying to make it on your own. You could have biases and superstitions. You might have gaps in your understanding of the basics, and struggle to understand the higher-level concepts.

It’s always hard, and you can never adapt 100%. And the creases are like structural fractures: they tend to spread into other related areas. It’s a struggle, but I’m an optimist, because I’ve heard stories about people turning their lives around from the negative to the positive.

The change can be gradual and gentle, or more disruptive. Disruptive change would iron out the whole paper, lay it out flat, and then start over, trying to re-learn everything from scratch, the right way. It is quite hard to do in practice, on a large scale. We’d have to forget everything. Everything. We just wouldn’t be able to operate without all the things we’ve learned. I think the disruptive approach doesn’t really work, unless you’re in an extreme situation to begin with.

A more gradual change would be to iron out parts at a time, and re-fold them. Take each small part from negative to positive. When done correctly, this creates a non-disruptive adaptation and gradually improves your shape. It might be easy to make progress initially, but this strategy only scales so far. That is because it is hard to fold segments of the paper in isolation when they connect to other parts of the paper. In the limit case you’ll end up with a Frankenstein of an origami which is half bird, half messy ball. You can have some really sharp and polished skills and knowledge, but be very inept in some other situation.

Some people can be really good with computers, and bad with people. Or vice versa. Some really smart people are extremely dumb. As someone who’s been associated with smart-sounding things like mathematics and computer science and who’s had a good career in both, yet has done plenty of dumb things outside of those, I implore you:

Don’t underestimate the capacity of “smart” people, and experts, to be dumb, even as related to their areas of expertise.

Each smart person has parts of their origami neat and clear, but other parts messy. It’s the natural place where we all end up, because we specialize in certain areas, and accumulate gaps and ignorance in others.

Improving the Frankenstein origami is a hill-climbing optimization that will get stuck in some local optimum, and yet it has plenty of value for us.
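If hill climbing is unfamiliar, here is a minimal sketch of it getting stuck. The `landscape` list and the `hill_climb` function are made up for illustration: each number is how "good" a shape is, and the climber only ever steps to a better immediate neighbor.

```python
def hill_climb(heights, start):
    """Greedy hill climbing on a 1D landscape.

    Moves to the higher neighbor until no neighbor is an improvement,
    then returns the index where it stopped.
    """
    position = start
    while True:
        neighbors = [
            i for i in (position - 1, position + 1)
            if 0 <= i < len(heights)
        ]
        best = max(neighbors, key=lambda i: heights[i])
        if heights[best] <= heights[position]:
            return position  # no small step makes things better
        position = best


# A small peak at index 2 and a taller one at index 6 (hypothetical values).
landscape = [1, 3, 5, 4, 2, 6, 9, 7]

stuck = hill_climb(landscape, start=0)  # climbs the nearby small peak
lucky = hill_climb(landscape, start=4)  # happens to start near the tall one

print(stuck, landscape[stuck])
print(lucky, landscape[lucky])
```

Starting from the left, the climber stops on the small peak, because every single step away from it looks like a step backwards. It is still better off than where it started, which is the "plenty of value" part.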

When we get stuck, we cannot re-fold any area without disrupting others. That’s where I think we can apply a separate approach. I think the approach is to define values and principles which guide our decisions and thinking, and to start applying them to the situations we’ve learned and internalized but which are in disagreement with them.

In other words, create our own creases in the paper and start propagating them. When we apply them in all our experiences, they become a blueprint, for our lives and minds, the way origami has a blueprint.

We start enforcing the creases one at a time. Developing a principle or value is like temporarily unfolding the whole paper, creating the fold in it at the right time, and then putting it back into the shape that it was. Except that we can’t afford to unfold the paper, so we need to start applying the crease from the side and propagate it locally. Over time, as we start adjusting our actions towards the new principle, we’re slightly altering, or disrupting, activities and skills which depended on the previous, wrong setup. Even though it’s uncomfortable, we are getting closer to the right shape.

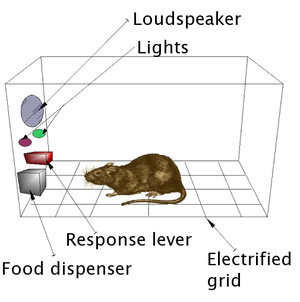

The discomfort comes from our conditioning. We spend our lives in Skinner boxes conditioning us to chase after the American dream, money, social status, likes on social media, sugar in our food, the enjoyment of watching fun shows, pictures of cats and dogs. Alcohol addiction, cigarette addiction, drug addiction, porn addiction, even exercise addiction. And to run away from things out of fear: fear of cancer, fear of “other” people, fear of people who have different cultures, beliefs, or political parties. Fear of rejection.

Fears and rewards, fears and rewards, they shape our brains into messy paper balls, full of prejudices and bad habits. Like stupid rats, we are conditioned to respond to certain foods, messages, and sounds, and build maze-running habits.

They leave precious little space for appreciation and love.

Our way out is to bring out the pitbull and chase and chase the rats in our brains until they run far away and evolve. We need the strong conviction of the pitbull, and the humility of the rats.